Orange: Neural Network: Difference between revisions

From OnnoCenterWiki

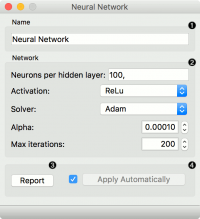

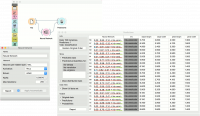

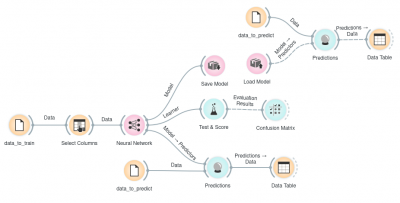

Revision as of 03:05, 23 January 2020

Source: https://docs.biolab.si//3/visual-programming/widgets/model/neuralnetwork.html

A multi-layer perceptron (MLP) algorithm with backpropagation.

Inputs

Data: input dataset

Preprocessor: preprocessing method(s)

Outputs

Learner: multi-layer perceptron learning algorithm

Model: trained model

The Neural Network widget uses sklearn’s Multi-layer Perceptron algorithm, which can learn non-linear as well as linear models.

A name under which it will appear in other widgets. The default name is “Neural Network”.

Set model parameters:

Neurons per hidden layer: a comma-separated list in which the ith element is the number of neurons in the ith hidden layer. E.g. a neural network with 3 hidden layers can be defined as 2, 3, 2.

Activation function for the hidden layer:

Identity: no-op activation, useful to implement linear bottleneck

Logistic: the logistic sigmoid function

tanh: the hyperbolic tangent function

ReLU: the rectified linear unit function

Solver for weight optimization:

L-BFGS-B: an optimizer in the family of quasi-Newton methods

SGD: stochastic gradient descent

Adam: stochastic gradient-based optimizer

Alpha: L2 penalty (regularization term) parameter

Max iterations: maximum number of iterations

Other parameters are set to sklearn’s defaults.
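
The parameters listed above correspond closely to arguments of sklearn’s MLPClassifier. The sketch below shows one way that mapping could look; it is not the widget's actual code. The `widget_settings` dictionary, the `build_mlp` helper and the chosen values are hypothetical and exist only for illustration. For a numeric target, MLPRegressor takes the same arguments.

```python
from sklearn.neural_network import MLPClassifier

# Hypothetical widget settings; names and values are illustrative only.
widget_settings = {
    "neurons_per_hidden_layer": "2, 3, 2",
    "activation": "ReLU",
    "solver": "Adam",
    "alpha": 0.0001,
    "max_iterations": 200,
}

# Widget labels (lower-cased) mapped to the strings sklearn expects.
ACTIVATIONS = {
    "identity": "identity",
    "logistic": "logistic",
    "tanh": "tanh",
    "relu": "relu",
}
SOLVERS = {"l-bfgs-b": "lbfgs", "sgd": "sgd", "adam": "adam"}


def build_mlp(settings):
    """Translate the (hypothetical) settings dict into an MLPClassifier."""
    # "2, 3, 2" -> (2, 3, 2): the ith element is the size of the ith hidden layer.
    hidden = tuple(
        int(n) for n in settings["neurons_per_hidden_layer"].split(",")
    )
    return MLPClassifier(
        hidden_layer_sizes=hidden,
        activation=ACTIVATIONS[settings["activation"].lower()],
        solver=SOLVERS[settings["solver"].lower()],
        alpha=settings["alpha"],            # L2 penalty
        max_iter=settings["max_iterations"],
        # every other argument keeps sklearn's default
    )


model = build_mlp(widget_settings)
print(model.hidden_layer_sizes, model.activation, model.solver)
```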

Produce a report.

When the box is ticked (Apply Automatically), the widget will communicate changes automatically. Alternatively, click Apply.

Examples

The first example is a classification task on the iris dataset. We compare the results of Neural Network with those of Logistic Regression.
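
A rough script equivalent of this comparison can be written directly against sklearn. This is a sketch under assumptions, not a reproduction of the workflow: the 5-fold cross-validation, the standardization step, the hidden layer size and the iteration limits are all choices made here for illustration, and the accuracies it prints need not match what the widgets report.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_iris(return_X_y=True)

# Both learners get the same standardization step so the comparison is fair.
learners = {
    "Neural Network": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(100,), max_iter=2000, random_state=0),
    ),
    "Logistic Regression": make_pipeline(
        StandardScaler(),
        LogisticRegression(max_iter=1000),
    ),
}

# Mean accuracy over 5 stratified folds for each learner.
scores = {
    name: cross_val_score(estimator, X, y, cv=5).mean()
    for name, estimator in learners.items()
}

for name, accuracy in scores.items():
    print(f"{name}: mean accuracy {accuracy:.3f}")
```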

The second example is a prediction task, still using the iris data. This workflow shows how to use the Learner output. We input the Neural Network prediction model into Predictions and observe the predicted values.
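
The same idea, training a model and then asking it for predicted values, can be sketched in sklearn as follows. The train/new split, the network size and the solver are assumptions made for this illustration; the workflow itself may feed the data to Predictions differently.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

iris = load_iris()

# Hold back 20% of the rows to play the role of "new" data to predict on.
X_train, X_new, y_train, y_new = train_test_split(
    iris.data, iris.target, test_size=0.2, stratify=iris.target, random_state=0
)

# Train the model (the counterpart of the widget's Model output).
model = MLPClassifier(
    hidden_layer_sizes=(10,), solver="lbfgs", max_iter=1000, random_state=0
)
model.fit(X_train, y_train)

# Predicted class and class probabilities for each held-back row.
predicted = model.predict(X_new)
probabilities = model.predict_proba(X_new)

for label, probs in zip(predicted[:5], probabilities[:5]):
    print(iris.target_names[label], probs.round(3))
```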

Other workflow examples: